Dividing is an infant’s first attempt to make sense of the world. When presented with the mother’s milk for the first time, before the sweet and warm sensations arrive, the baby must first divide the self from the non-self (is this an internal part of me, or external?), and then immediately divide the harmful from the nutritious (should I take it or kick it away?). Only after the familiar sound and smell of the mother encourage the baby to take a leap of faith and land on the “external/nutritious” quadrant does the baby get the chance to taste the warmth and sweetness of the milk.

Dividing continues to be the most successful tool humans have for understanding and changing the world. Bodies are divided into systems, systems into organs, organs into tissues, tissues into cells, cells into molecules, molecules into atoms, atoms into nuclei and electrons… Products are divided into assemblies, components and parts; complexities are divided into conquerable simplicities…

Even among humans, where we’re supposed to shed the habit of dividing and unite in humanity, dividing seems to work better. Donald Trump won a second term by dividing. The United States continues to grow the NATO division of the Western Bloc, even though the Eastern Bloc has long since disintegrated with the collapse of the Soviet Union. Then there were the various theories and models that divided the Cold War world into three worlds.

Today I’m going to follow the Maoist Three Worlds Theory and divide enterprise AI/ML practice into three worlds.

The three worlds division

A generic characterization of the three worlds division for any industry can be as below:

| Industry Players | First World | Second World | Third World |
|---|---|---|---|
| Driven By | Mission | Value | Hype |
| Institutional Persona | Innovators, Creators | Builders | Users, Buyers, Imitators, Pretenders, Adaptors |
| Value Add | Original contributions to human knowledge and technology | Original applications of first world knowledge and technology to institutional challenges from the first principles (Ab Initio) | Derivative and possibly wasteful applications of first and second world artifacts and practices to institutional challenges |
| Examples | Quantum computing (Google, IBM), Transformer (Google), VR/AR (Meta), Communication (Huawei), Space (SpaceX) | Recommendation System (Netflix), Drone (DJI) | Most GenAI use cases around you |

Specific to AI/ML domain:

- The First World of AI/ML comprises only Google and Meta, the two AI superpowers. They both have tons of dirty money from ads, an unmatched deep reservoir of talent in golden handcuffs, and a stable cultural and market environment for sustained innovation.

- The Second World of AI/ML comprises the likes of Apple, Microsoft and OpenAI, the AI powers. Their power derives not necessarily from their AI/ML prowess per se but from the direct touch their products have with consumers (2C) and businesses (2B) and the value they may create for them out of AI/ML. As an example, AWS belongs to the Second World because of the B2B carrier role it plays, even though it has no human-facing products assimilating AI and is not innovative enough to contribute anything to the field. Netflix and Pinterest are other examples of second world AI players, as they’re building better user experiences with up-to-date AI and contributing to the field by publishing their work.

- The Third World of AI/ML comprises the rest: the underdeveloped, the unaligned[1], the uninitiated and the uninvolved. The majority of the Fortune 500, including familiar names such as Home Depot and IBM, are in the third world of AI.

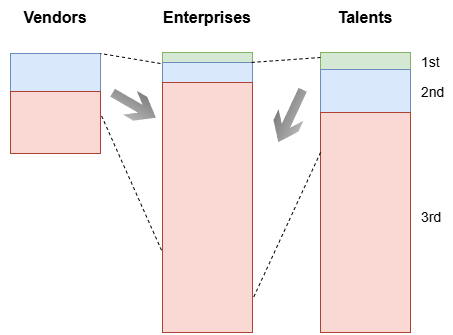

Yes, you “hear” me right: with the purported proportions shown above, I contend that 95% of the enterprises are in the Third World of AI/ML practice.

The Eight Commandments of AI/ML practice

I am the Trust Me thy Bro.

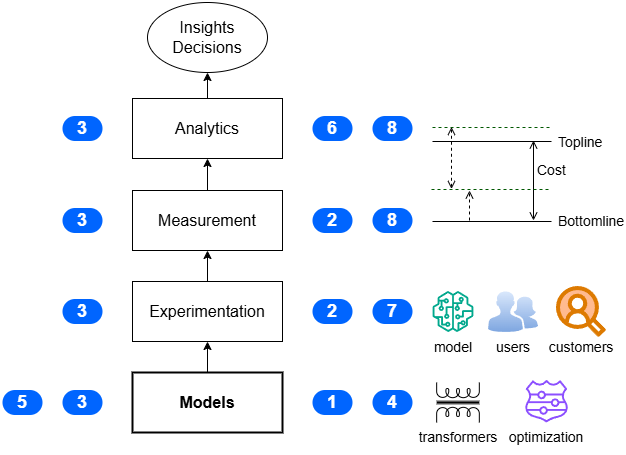

- Thou shall have the capacity to architect and train representation learning (e.g., twin-tower in recommendation systems, transformer in language modeling) deep neural network models from scratch on your private data. Imitations, such as downloading a vendor or open-source model from Hugging Face and fine-tuning it, don’t count.

- Thou shall not deploy a model to production before satisfactory online testing measured with business-oriented metrics.

- Thou shall be Code-First. Thou shall not license and make use of low or no-code AI/ML solutions such as H2O, Dataiku or KNIME.

- Thou shall have an AI/ML platform consisting of at least model design and run-time, ideally covering the end-to-end model lifecycle.

- Thou shall have a DevOps practice with data, feature and model pipelines in place for automation.

- Thou shall have Ph.D.-level talent conducting causal analytics for scientific decisions.

- Thou shall have a rapid application development framework and runtime environment in place for self-service last-mile activation and deliveries. Thou shall support web app, APIs and batch jobs.

- Thou shall have a cost and value metrics framework and system in place to conduct cost-benefit analysis for AI/ML use cases.

You shall set up these practices, which I command you today, on this random day.

The gestalt of the Eight Commandments

The structural and functional organization (gestalt) of a functioning AI/ML ecosystem centers on the model building and evaluation chain, with an emphasis on the evaluative part. Why? Because too many models are built to no beneficial business effect!
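To make the evaluative part concrete, here is a minimal sketch of the kind of online test the second commandment asks for: a two-sided, two-proportion z-test comparing a business metric (conversion rate) between a control model and a candidate model. The function name and the traffic numbers are hypothetical and only for illustration; a real experimentation platform also handles randomization, sample sizing and multiple metrics.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rate (B minus A).

    conv_a / n_a: conversions and users in control (A)
    conv_b / n_b: conversions and users in treatment (B)
    Returns (absolute lift, z statistic, p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical numbers: the candidate model lifts conversion from 10% to 11%
lift, z, p_value = two_proportion_z_test(1000, 10_000, 1100, 10_000)
print(f"lift={lift:.3f} z={z:.2f} p={p_value:.4f}")
```

The point of the sketch is the decision rule, not the statistics: the candidate ships only if the business metric moves and the movement is unlikely to be noise.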

What’s so special about representation (a.k.a. embeddings)?

I’m on my third read of Owen Barfield’s “Saving the Appearances: A Study in Idolatry”, in which he traces the evolution of human consciousness across some three thousand years of history. He opens the book with a discussion of rainbows, which don’t physically exist independent of optical observers such as a human or a camera. If you were the first caveman to see a rainbow, how would you know it’s not a hallucination? You would turn around to your fellow cavemen and see that they were pointing at and excited about the same “thing”. Barfield calls that thing a “representation”, the figuration of a phenomenon in a human mind. When a representation is shared among many humans, it’s a “collective representation”.

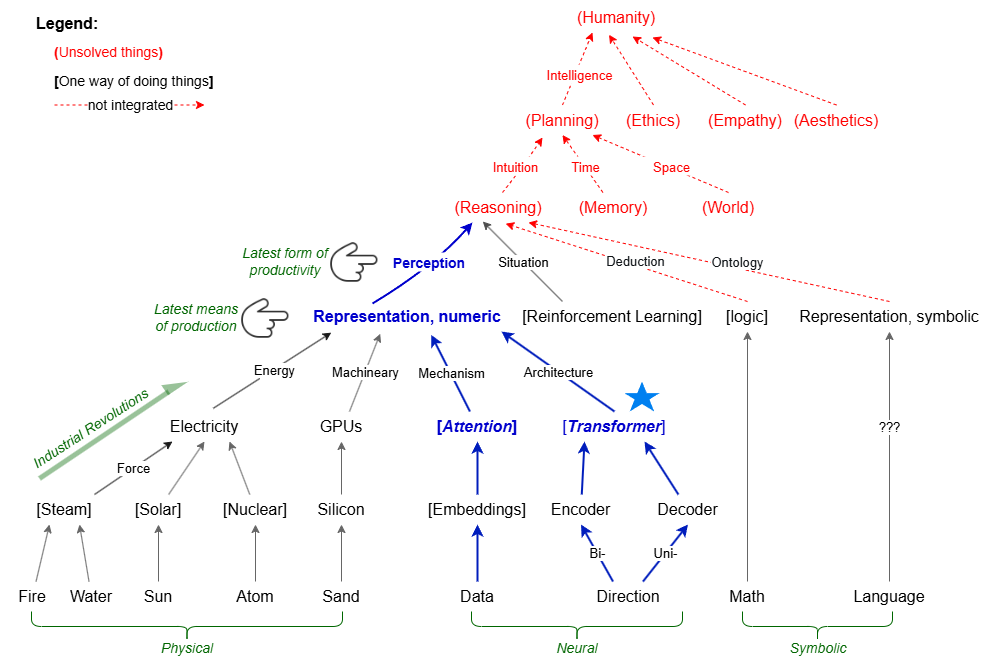

The embeddings, those vectors of numbers that flow and morph through the layers of deep neural networks of all different shapes, are the representation of a phenomenon to a mechanistic mind. Note that they are just one kind of machine representation that happens to work. I’m sure there are better ones out there to be found, and the place to find them might lie outside of AI, in cognitive science and philosophy.

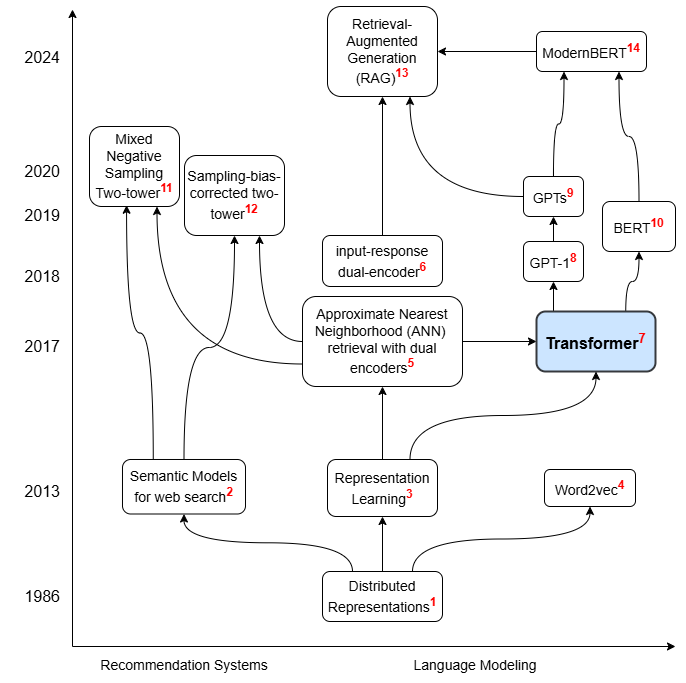

A two-hour literature review shows the history of representation learning in recommendation systems and language modeling, the two application areas of AI that use up 99.7% of the data center capacity:

These papers should be a good starting point and lead to other papers: [1][2][3][4][5][6][7][8][9][10][11][12][13][14]

What’s so special about Transformer?

Transformer, the ‘T’ in ChatGPT and in BERT and all its variants, is the state-of-the-art neural architecture behind all the AI innovations since its initial publication in 2017:

- It unleashes the numeric representation of data, the next great power humans have invented after steam and electricity.

- It automates perception and simulates inverse perception (dreaming, a.k.a. image/video generation).

- But by itself, it’s incapable of automating reasoning. Don’t be fooled by the chain of “thought” and AGI talk based solely on LLMs.

- Due to its paradigm-shifting way of doing AI and its steep learning curve, mastering it is the touchstone of modern-day AI talent and of the maturity of an AI practice.

Transformer is just one way to get task-specific machine representations. Machine reasoning is not solved yet. Machine-simulated humanity is impossible. Given nothing but a picture of Sam Altman and one of Mark Zuckerberg, do you think machines can ever develop the kind of aesthetics that leads to human-level first impressions: one is an evil person currently doing great things, while the other is doing evil things right now but with the good will to do good?

AI Ab Initio vs AI Adjacent

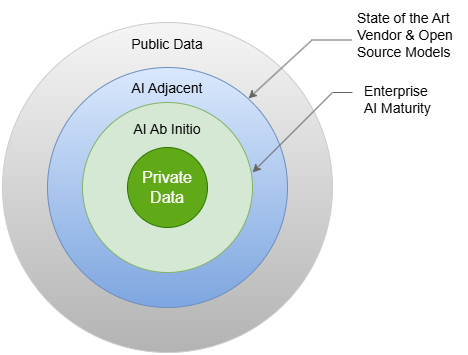

The most effective AI solutions cannot be bought. They must be built from first principles (Ab Initio) with private data. AI Ab Initio includes:

- Deep Neural Network (DNN) models, which can ingest multi-modal raw data. Regressions and decision trees, whose performance relies on feature engineering, are outdated.

- Transformer, the special DNN architecture which learns context-specific representations. Virtually all LLMs today are transformers. Attention is the mechanism Transformer uses to calculate representational embeddings.

- Reinforcement Learning, the most promising paradigm to move us from perception closer to intelligence.
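For readers who have never looked inside the box, the attention computation mentioned in the Transformer item can be sketched in a few lines. This is a deliberately stripped-down illustration of scaled dot-product attention in plain Python: a single head, no learned query/key/value projections, no masking and no batching, all of which a real Transformer layer adds on top. The function names and the toy vectors are mine.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each output vector is a weighted average of the value vectors,
    weighted by how well the query matches each key.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Context-specific representation: weighted sum of the values
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy example: the query matches the first key far better than the second,
# so the output is dominated by the first value vector.
print(attention([[10.0, 0.0]],
                [[10.0, 0.0], [0.0, 10.0]],
                [[1.0, 0.0], [0.0, 1.0]]))
```

The “context-specific” part of the representation comes from the weights: the same value vectors are mixed differently for every query.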

AI endeavors not practicing the first principles are considered AI adjacent. They typically revolve around open source or vendor models which are not trained on private enterprise data. In the order of their proximity to AI Ab Initio:

- Fine-tuning. A pre-trained vendor or open-source model is fine-tuned with private data. As its name suggests, fine-tuning is limited by the capabilities of the pre-trained model. It’s unclear what the most effective and economical way is to take full advantage of private data and allow for the greatest architecture customization and adaptation to unique enterprise tasks, but fine-tuning is definitely not it.

- Retrieval Augmented Generation (RAG). A search over private data retrieves content that is then used to guide an LLM in its content generation.

- Prompting. You structure your question to the LLM in specific ways so that it generates better answers. Pure trial and error, alchemy in nature rather than science. “Ask ChatGPT” is not AI!
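To show how thin the RAG layer really is, here is a toy sketch of its two steps: retrieve the most relevant private document, then paste it into the prompt. The retrieval here is a bag-of-words cosine similarity, which I swapped in for the learned embeddings and vector index a production system would use; the documents, function names and prompt wording are all hypothetical, and the LLM call itself is left out.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents,
                    key=lambda d: cosine(q, bag_of_words(d)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Compose the prompt that would be sent to an LLM (call not shown)."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical private documents
DOCS = [
    "Refund requests are accepted within 30 days of purchase.",
    "The warehouse ships orders every weekday morning.",
    "Support is available by email around the clock.",
]
print(build_prompt("How many days do I have to request a refund?", DOCS))
```

Nothing in this sketch trains a model on the private data, which is exactly why I classify RAG as AI adjacent.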

In short, what those whom I call “HuggingFace and ChatGPT data scientists” perform at work can at best be classified as AI adjacent. Without HuggingFace and ChatGPT, they can’t walk on their own legs. At the enterprise level, the more AI Ab Initio work an AI practice performs with its private data, the more mature it is and the closer it is to state-of-the-art AI.

The backdrop of the industrial revolutions

No matter how enthusiastically Sam Altman promises AGI is right around the corner and we’re in the Intelligence Age, do not believe him. What AI has achieved so far is merely perception. The gap between perception and intelligence could be exponentially wider than we thought.

| Industrial Revolution | Power Source | Rough Start | Characterization | Culminates In |
|---|---|---|---|---|
| 1st | Steam | 1760 | Coal, steam engine, mechanization of manual labors | Automatic Motion |
| 2nd | Electricity | 1870 | Combustion engine, telephone, car | Automatic Control |
| 3rd | Nuclear | 1970 | Electronics, computers, internet of things | Digitization |
| 4th (we’re here!) | Information | 2000 | Internet, web, genome editing, ChatGPT | Automatic Perception |
| 5th | Intelligence | 20?? | AGI, Robots | Automatic Reasoning |
| 6th | Imagination[2] | ???? | AI/AR/VR powered simulation, precise gene editing and robotic actuation enable fast trial-n-error which eventually displace evolution | Intelligent Creation |

After the sixth industrial revolution, which culminates in intelligent creation, humans become God : )

The Third World of AI/ML

Failure to comply with any of the Eight Commandments automatically puts you in the deeper end of the third world, but satisfying all eight commandments doesn’t necessarily elevate you to the Second or First world. The eight pillars must be sturdy, tall and balanced enough to create value (2nd world) and accomplish missions (1st world).

The courage to be

Transformer-based AI started not long ago, and the majority of enterprises are in the third world. Being in the third world of AI/ML is nothing for an enterprise to be ashamed of. Those that see and acknowledge their third-world status have a better chance of maturing within the third world and developing into the second world.

Practically, the relative proportions of AI Ab Initio and AI Adjacent work determine the maturity of an AI practice. An AI practice is considered 2nd World only after AI Ab Initio work dominates and is sustained as a capability.

The enormous market

If you agree with the demarcation of the three worlds in AI, it’s needless to say where the market and the money are. However, vendors in the first and second worlds, especially the innovative ones, don’t seem to see this. By way of example, below are a few of my rants from the point of view of a third world AI practitioner:

- Although it has better AI technologies, GCP should learn from AWS’s customer focus. AWS is not innovative at all but, for money or love, they do listen to our pain points during our weekly meetings.

- Although it has the talent and money to innovate, HuggingFace should focus on mundane things such as formats, artifacts, community, platform and experiences, all geared toward the third world.

- You can’t beat Nvidia on LLM inference engines. Stop wasting your efforts on HuggingFace Accelerate.

- You didn’t add any value with your Generative AI Service (HUGS). It’s not much of a pain point and not hard for us to build ourselves. But by adopting the OpenAI API, you missed the opportunity to develop an open standard for the GenAI prompt interface, force vendors to adopt it and eradicate the API incompatibility problem at the root.

The three grades of AI talents

Maturity of enterprise AI practice is determined by the collective talents of its AI practitioners. Parallel to the three-worlds division of enterprise AI practice, AI talent can be rated into three divisions:

- Top AI talents have the capability to conduct innovative research and development. They typically have formal education in math and computer science and are lifelong learners.

- Second-rate AI talents have the capability to solve business problems with state-of-the-art AI architecture and technologies. They typically have formal training in computer science and are lifelong learners.

- Third-rate AI talents are the rest. They typically have no formal training but boast tons of certificates, or have formal training that is outdated due to their lack of lifelong learning.

Because individual talent is only one part of the collective whole of an enterprise AI practice, the three-grade division of AI talent differs from the three-world division of AI practices in two ways:

- It considers potential as well as experience. A fresh graduate who implemented the annotated transformer in a class is considered more of a talent than a practitioner with a decade of experience who can’t grow beyond scikit-learn.

- It requires a talent to master only one or more, rather than all, of the Eight Commandments of AI.

Why is formal education such a big deal?

Compared to traditional ML, which can be used as black-box tools out of readily available toolboxes such as Scikit-Learn, AI systems built on deep neural network architectures can only be built as white boxes. Each unique business problem with its unique set of data calls for a unique layering and wiring of the white box. One needs to think in matrices and program in AI domain-specific frameworks laden with concepts ranging from linear algebra and non-linear optimization to distributed computing. Formal knowledge of math and computing is a must to be productive in the field.

Compared to the slow pace of evolution of traditional ML, the speed and volume of twenty-first-century, intelligence-age AI innovations are accelerating on an exponential scale. So is the noise from the greedy capitalistic gold diggers and the misguided dreamers. Sifting the signal out of the noise, seeing the path to business value and changing the status quo all require not only the research skills one typically acquires through a STEM Ph.D. program, but also the curious mindset and the philosophical insight one either has or has not. I posit that folks who went through the formal education process tend to have those.

The professionalization of AI

Akin to medicine and law, intelligence-age AI is a profession. Although it is not regulated by bar or licensing examinations, formal education in math and computer science is a good predictor of a successful AI professional. No matter how smart a nurse is and how many surgeries she has observed in the operating room, you want your open-heart surgery to be performed by a surgeon with an MD degree.

In addition to the equivalents of doctors and lawyers, there will be roles similar to nurses, medical equipment operators and paralegals in the profession of AI. Qualifications such as degrees, bar admissions, residencies and fellowships will be the sorting criteria among different roles.

The talent-world and vendor-world differential

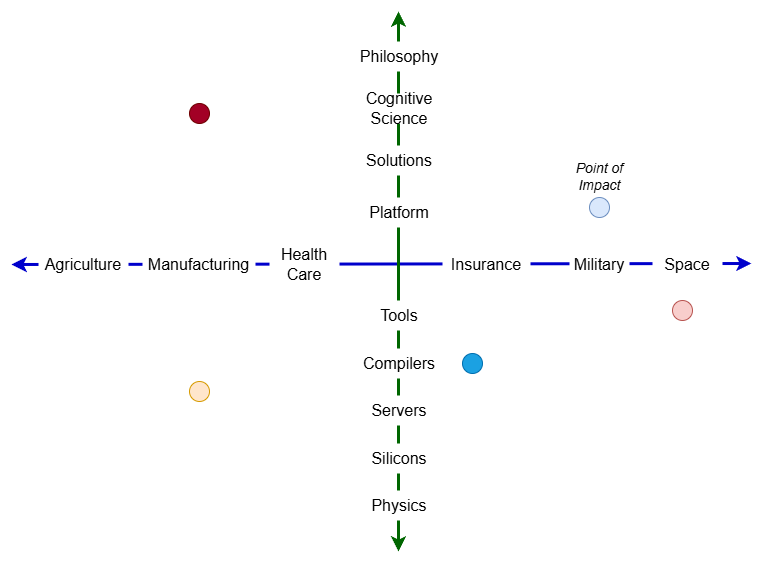

AI will eat the world: all industries that exist now or will exist. The AI profession comprises a deep stack of domains, from physics and silicon all the way up to cognitive science and philosophy. If you decide to pursue a career in AI, given your personal interests and talent, where is your optimal point of impact?

I encourage folks to consider maximizing impact rather than compensation while searching for their point of impact in AI. Even if you’re a top talent, would you rather be a small cog in a big machine such as Google, or a pioneer making a huge impact in health care? Talent or vendor alike, if you want to have a bigger impact, you might want to consider down-worlding: working for, or catering to, a lower world. I for one, a barely second-rate talent, am enjoying making a great impact in the third world : )

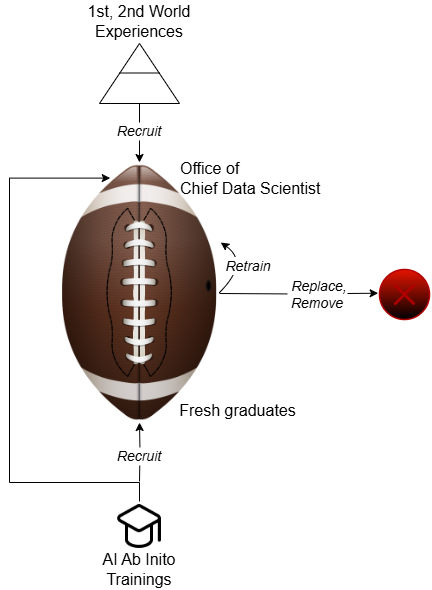

Chief AI Scientist

Many third-world enterprises have created the role of Chief AI Scientist and appointed to it an experienced generalist AI professional who performs the functions below:

- Follow the evolutions and revolutions of SOTA AI. Craft an AI strategy that maximizes business impact by stipulating clearly what to do and what not to do.

- Inform and educate stakeholders, from AI practitioners to business owners and top executives, and secure their buy-in for said strategy.

- Ensure execution of said strategy by training, auditing the quality and progress of work and holding AI practitioners accountable.

The Chief AI Scientist must have real-life experience in AI ab initio and must be an external hire, ideally from the first or second world of AI. There should be plenty of frontline AI leaders in big tech who would accept a smaller pay package in exchange for the leadership role and work-life balance in the Third World.

Recruit, Retrain, Replace, Remove

With the right AI leadership in place in the “lean not mean” office of the Chief AI Scientist, the AI talent refresh in the Third World may begin in earnest:

- Recruit fresh graduates majoring in AI or related fields. They’re trained in state-of-the-art AI at school and are ready to conduct AI ab initio work from the get-go.

- Retrain existing AI talents in AI ab initio skills such as deep neural networks and reinforcement learning.

  - Vendor-led training in AI adjacent topics such as “GenAI with AWS” is a waste of money.

  - AI ab initio knowledge can be cross-trained and disseminated internally by domain experts.

  - AI ab initio training can be evaluated by completion of projects or by thesis-defense-style presentations to a peer committee.

- Replace those AI talents who cannot make progress in picking up AI ab initio skills.

- Remove AI roles that don’t add value.

The end goal, however long it takes, is for everyone who has “AI” in their title or org name to have appropriate levels of knowledge and skills in state-of-the-art AI.

Notes

[1] SAS Institute and MathWorks are two examples of the unaligned. Both are still leaders in their niches, both still enjoy a broad customer base, both missed the AI/ML wave and both refuse to abandon their proprietary technologies and align with AI/ML industry standards in their catch-up game.

[2] My adoption of the word “Imagination” doesn’t come from the Imagination Age; rather, it comes from Owen Barfield’s concept of final participation, which features an imaginative soul.

Reference:

- Experimentation: PlanOut | Experimentation for Engineers | Online Controlled Experiments

- Before LLM, recommendation system accounts for 80% of workload in one Meta data center